Introduction & Overview

Fuzz testing, or fuzzing, is a dynamic testing technique used to identify vulnerabilities in software by injecting unexpected, malformed, or random inputs. In the context of DevSecOps, which emphasizes integrating security practices into the development and operations lifecycle, fuzz testing plays a critical role in proactively identifying and mitigating security flaws before they can be exploited. This tutorial provides an in-depth exploration of fuzz testing, tailored for DevSecOps practitioners, covering its concepts, implementation, use cases, benefits, limitations, and best practices.

What is Fuzz Testing?

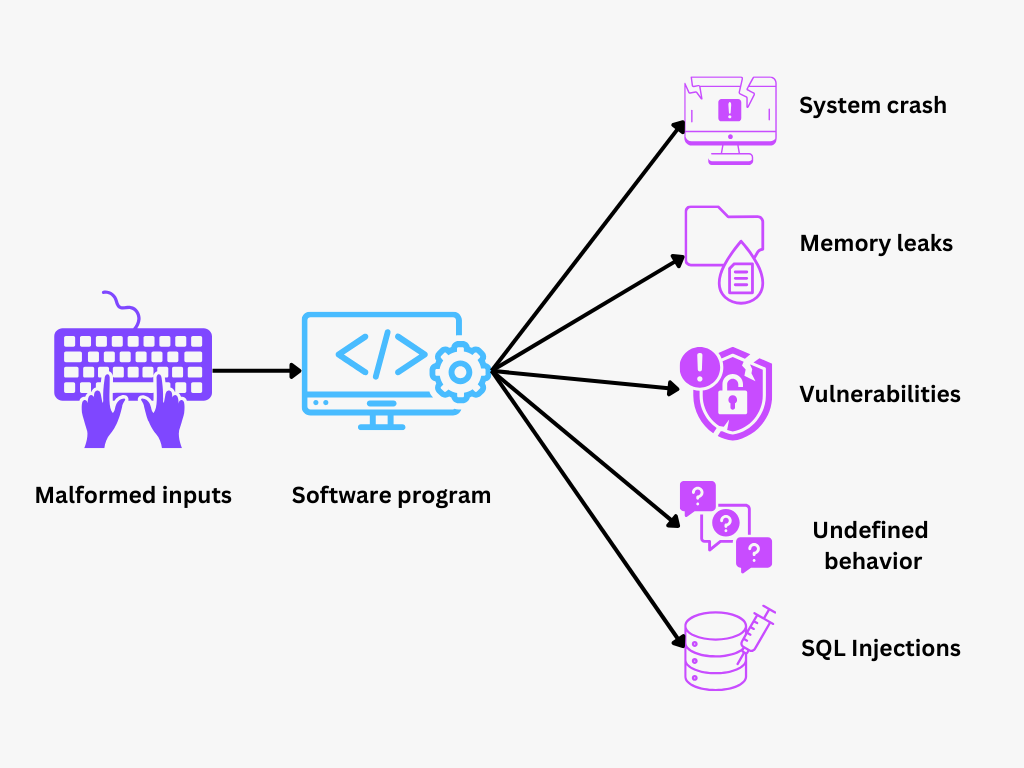

Fuzz testing involves sending invalid, unexpected, or random data to a system to uncover defects such as crashes, memory leaks, or security vulnerabilities. It is particularly effective for testing software resilience against edge cases that manual testing might miss.

- Purpose: Identify bugs, vulnerabilities, and unexpected behavior in applications.

- Key Characteristics:

  - Automated input generation.

  - Focus on robustness and security.

  - Applicable to various systems, including APIs, network protocols, and file parsers.

History or Background

Fuzz testing originated in the late 1980s when Professor Barton Miller at the University of Wisconsin conducted experiments to test UNIX utilities by feeding them random inputs, revealing surprising failure rates. Since then, fuzzing has evolved significantly:

- 1990s: Early tools like `fuzz` focused on simple random input generation.

- 2000s–2010s: Coverage-guided fuzzing emerged, popularized by AFL (American Fuzzy Lop, first released in 2013), and improved efficiency by tracking which code paths each input exercises.

- 2010s–Present: Integration with CI/CD pipelines and cloud-based testing platforms, driven by DevSecOps adoption, has made fuzzing a standard security practice.

Why is it Relevant in DevSecOps?

In DevSecOps, security is embedded throughout the software development lifecycle (SDLC). Fuzz testing aligns with this philosophy by:

- Proactive Vulnerability Detection: Identifies issues early in development, reducing remediation costs.

- Automation: Fits seamlessly into automated CI/CD pipelines, enabling continuous security testing.

- Compliance: Supports security standards and regulatory requirements (e.g., OWASP guidance, PCI DSS) by identifying vulnerabilities like buffer overflows or injection flaws.

- Scalability: Adapts to cloud-native and microservices architectures, critical in modern DevSecOps environments.

Core Concepts & Terminology

Key Terms and Definitions

- Fuzzer: A tool or framework that generates and injects test inputs into a target system.

- Input Corpus: A set of initial valid inputs used as a starting point for generating fuzzed inputs.

- Mutation-Based Fuzzing: Modifies valid inputs to create malformed ones (e.g., flipping bits, adding random bytes).

- Generation-Based Fuzzing: Creates inputs from scratch based on a specification or model.

- Coverage-Guided Fuzzing: Uses code coverage metrics to guide input generation, maximizing the exploration of code paths.

- Crash: An unexpected program termination caused by invalid input, often indicating a potential vulnerability.

- Seed: An initial input used to generate fuzzed inputs.
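To make the mutation-based definition above concrete, here is a minimal sketch in C of the two mutations it mentions: flipping a bit and inserting a byte. The function names `flip_bit` and `insert_byte` are illustrative assumptions, not part of any fuzzer's API, and a real fuzzer would pick positions and values at random and combine many more strategies.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Flip one bit of the buffer in place. `bit_index` counts bits from the
   start of the buffer (bit 0 is the lowest bit of byte 0). */
void flip_bit(uint8_t *data, size_t len, size_t bit_index) {
    assert(bit_index / 8 < len);
    data[bit_index / 8] ^= (uint8_t)(1u << (bit_index % 8));
}

/* Insert `value` at position `pos`, shifting the tail right by one byte.
   `cap` is the capacity of the buffer; it must exceed `len`.
   Returns the new length. */
size_t insert_byte(uint8_t *data, size_t len, size_t cap,
                   size_t pos, uint8_t value) {
    assert(pos <= len && len < cap);
    memmove(data + pos + 1, data + pos, len - pos);
    data[pos] = value;
    return len + 1;
}
```

Starting from the seed `hello`, flipping bit 0 of the first byte turns `h` (0x68) into `i` (0x69); inserting a byte afterwards grows the input by one. Each mutated buffer would then be handed to the target.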

How It Fits into the DevSecOps Lifecycle

Fuzz testing integrates into various DevSecOps phases:

- Plan: Define fuzzing requirements and select target components (e.g., APIs, parsers).

- Code: Run fuzz tests on code changes during development to catch vulnerabilities early.

- Build: Integrate fuzzing into CI/CD pipelines to test builds automatically.

- Test: Perform fuzzing alongside other security tests (e.g., SAST, DAST).

- Deploy: Validate production-ready components with fuzzing to ensure robustness.

- Monitor: Keep fuzzing released versions, preferably in a staging or production-like environment rather than against live systems, to catch new vulnerabilities post-deployment.

Architecture & How It Works

Components and Internal Workflow

A fuzz testing system typically includes:

- Input Generator: Creates random or mutated inputs based on a corpus or specification.

- Target Application: The software or component being tested (e.g., API, library, or binary).

- Execution Engine: Runs the target with fuzzed inputs and monitors behavior.

- Crash Analyzer: Detects crashes, logs issues, and identifies potential vulnerabilities.

- Feedback Loop: In coverage-guided fuzzing, uses code coverage data to refine inputs.
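The feedback loop can be sketched in a few lines of C. This is a toy model under stated assumptions: the target `toy_parse` is instrumented by hand, the coverage map has only eight slots, and all names are hypothetical. Real coverage-guided fuzzers insert the instrumentation at compile time and use much larger maps.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAP_SIZE 8

/* Hand-instrumented toy target: records which branches ran in `cov`. */
void toy_parse(const uint8_t *data, size_t len, uint8_t cov[MAP_SIZE]) {
    cov[0] = 1;                               /* function entry */
    if (len > 0 && data[0] == 'A') {
        cov[1] = 1;                           /* first byte matched */
        if (len > 1 && data[1] == 'B') {
            cov[2] = 1;                       /* second byte matched */
        }
    }
}

/* Merge one run's coverage into the global map.
   Returns 1 if the run reached a location not seen before, else 0. */
int merge_coverage(uint8_t global[MAP_SIZE], const uint8_t run[MAP_SIZE]) {
    int found_new = 0;
    for (size_t i = 0; i < MAP_SIZE; i++) {
        if (run[i] && !global[i]) {
            global[i] = 1;
            found_new = 1;
        }
    }
    return found_new;
}

/* Feedback step: execute one input and report whether it is worth
   keeping in the corpus for further mutation. */
int is_interesting(const uint8_t *data, size_t len, uint8_t global[MAP_SIZE]) {
    assert(data != NULL || len == 0);
    uint8_t run[MAP_SIZE];
    memset(run, 0, sizeof run);
    toy_parse(data, len, run);
    return merge_coverage(global, run);
}
```

An input such as `A` is kept because it reaches a new branch, which in turn makes `AB` reachable by a single further mutation; inputs that only repeat known coverage are discarded.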

Workflow:

1. Initialize with a seed corpus or specification.

2. Generate fuzzed inputs (mutation or generation-based).

3. Execute the target application with these inputs.

4. Monitor for crashes, timeouts, or anomalies.

5. Analyze crashes to identify vulnerabilities.

6. Refine inputs based on feedback (if coverage-guided).
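The generate, execute, and monitor steps of this workflow can be sketched as a small C loop. This is a simplified illustration that assumes a POSIX system (`fork`, `waitpid`); the target `toy_target` and the helpers `crashes_on` and `fuzz_loop` are hypothetical names, and timeout handling and coverage feedback are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Toy target (hypothetical): aborts when the input starts with "FUZ". */
void toy_target(const uint8_t *data, size_t len) {
    if (len >= 3 && data[0] == 'F' && data[1] == 'U' && data[2] == 'Z') {
        abort();
    }
}

/* Execute the target on one input in a child process.
   Returns 1 if the child was killed by a signal (a crash),
   0 if it exited normally, and -1 if fork or waitpid failed. */
int crashes_on(const uint8_t *data, size_t len) {
    pid_t pid = fork();
    if (pid < 0) return -1;
    if (pid == 0) {              /* child: run the target, then exit */
        toy_target(data, len);
        _exit(0);
    }
    int status = 0;
    if (waitpid(pid, &status, 0) < 0) return -1;
    return WIFSIGNALED(status) ? 1 : 0;
}

/* Mutate-execute-monitor loop: overwrite one random byte per iteration
   and stop at the first crash. Returns the iteration index at which a
   crash was observed, or -1 if none occurred within max_iters. */
int fuzz_loop(uint8_t *input, size_t len, unsigned seed, int max_iters) {
    assert(len > 0);
    srand(seed);
    for (int i = 0; i < max_iters; i++) {
        input[(size_t)rand() % len] = (uint8_t)(rand() % 256);
        if (crashes_on(input, len) == 1) return i;
    }
    return -1;
}
```

Running the target in a child process is one common design choice: a crash kills only the child, so the fuzzer survives and can record the offending input.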

Architecture Diagram Description

Imagine a diagram with the following components:

- Input Corpus (top left): A repository of valid inputs (e.g., JSON files, network packets).

- Fuzzer Engine (center): Contains the Input Generator, Mutation Logic, and Coverage Tracker, connected to the Input Corpus.

- Target Application (right): A box representing the software under test, receiving inputs from the Fuzzer Engine.

- Crash Analyzer (bottom): Collects crash logs and vulnerability reports, with an arrow looping back to the Fuzzer Engine for feedback.

- CI/CD Pipeline (background): A pipeline flow (Plan → Code → Build → Test → Deploy) showing fuzzing integration at the Test stage.

Integration Points with CI/CD or Cloud Tools

- CI/CD Integration:

  - Tools like Jenkins or GitLab CI/CD can trigger fuzzing jobs on code commits.

  - Example: Use a fuzzing tool like AFL or libFuzzer in a GitHub Actions workflow.

- Cloud Tools:

  - AWS CodePipeline: Run fuzz tests in a dedicated testing stage.

  - Google Cloud Build: Use containerized fuzzers for scalable testing.

  - Kubernetes: Deploy fuzzers as pods for distributed testing of microservices.
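Whatever CI system triggers the job, the unit it builds and runs is usually a small fuzz harness. The sketch below shows what such a harness might look like for libFuzzer. `LLVMFuzzerTestOneInput` is libFuzzer's documented entry point; `parse_key_length` is a hypothetical function under test, invented here for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical function under test: scans a "key=value" buffer and
   returns the length of the key, or -1 if there is no '=' separator. */
int parse_key_length(const uint8_t *data, size_t len) {
    for (size_t i = 0; i < len; i++) {
        if (data[i] == '=') return (int)i;
    }
    return -1;
}

/* libFuzzer entry point: called once for every generated input. */
int LLVMFuzzerTestOneInput(const uint8_t *data, size_t size) {
    int key_len = parse_key_length(data, size);
    /* Property check: a reported key length must lie inside the input. */
    assert(key_len < 0 || (size_t)key_len < size);
    return 0;
}
```

A pipeline step could build this with `clang -g -O1 -fsanitize=fuzzer,address harness.c -o harness` and run it for a bounded time with `./harness -max_total_time=60`, failing the job if the process reports a crash. The file name and the 60-second budget are assumptions to adjust per project.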

Installation & Getting Started

Basic Setup or Prerequisites

To start fuzz testing, you need:

- A fuzzing tool (e.g., AFL++ or libFuzzer) or a fuzzing service built on such tools (e.g., OSS-Fuzz).

- A target application or component to test.

- A development environment with compilers (e.g., GCC, Clang) for instrumentation.

- Optional: A CI/CD system for integration (e.g., Jenkins, GitHub Actions).

System Requirements:

- OS: Linux (preferred for most fuzzers), macOS, or Windows (with WSL for some tools).

- Dependencies: Python, CMake, or specific libraries (depending on the fuzzer).

- Hardware: Multi-core CPU and sufficient RAM (8GB+) for efficient fuzzing.

Hands-On: Step-by-Step Beginner-Friendly Setup Guide

This guide demonstrates setting up AFL++ (an advanced version of American Fuzzy Lop) on Ubuntu to fuzz a simple C program.

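1. Install Dependencies (the AFL++ installation guide lists further optional packages):

       sudo apt-get update
       sudo apt-get install -y build-essential clang llvm python3 git

2. Clone and Build AFL++:

       git clone https://github.com/AFLplusplus/AFLplusplus.git
       cd AFLplusplus
       make
       sudo make install

3. Create a Sample C Program (test.c). Because afl-fuzz passes the path of each generated input file in place of `@@`, the program reads its input from that file:

       #include <stdio.h>
       #include <string.h>

       int main(int argc, char *argv[]) {
           char input[256];
           char buffer[10];
           /* afl-fuzz replaces @@ with the path of the generated input file. */
           FILE *f = (argc > 1) ? fopen(argv[1], "rb") : NULL;
           if (f == NULL) return 1;
           size_t n = fread(input, 1, sizeof(input) - 1, f);
           fclose(f);
           input[n] = '\0';
           strcpy(buffer, input); // Vulnerable to buffer overflow
           printf("Input: %s\n", buffer);
           return 0;
       }

4. Compile the Program with AFL Instrumentation:

       afl-clang-fast -o test test.c

5. Create an Input Corpus:

       mkdir inputs
       echo "hello" > inputs/sample1.txt

6. Run AFL++:

       afl-fuzz -i inputs -o outputs -m none -- ./test @@

   - `-i inputs`: Input corpus directory.

   - `-o outputs`: Output directory for the fuzzer's findings (queue, crashes, hangs).

   - `-m none`: Disable memory limits (use cautiously).

   - `@@`: Placeholder that afl-fuzz replaces with the path of the current input file.

7. Analyze Results:

   - Check the `outputs` directory for crashing inputs (recent AFL++ releases store them under `outputs/default/crashes`).

   - Example crash input: A long string causing a buffer overflow.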
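Once a crash has been traced back to the `strcpy` call in test.c, the usual remedy is a bounded copy. The following is one possible sketch, not the only fix; the helper name `copy_bounded` is an assumption made for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Copy `src` into `dst` without ever writing more than `dst_size` bytes.
   The result is always NUL-terminated.
   Returns 1 if the whole input fit, 0 if it was truncated. */
int copy_bounded(char *dst, size_t dst_size, const char *src) {
    assert(dst_size > 0);
    int written = snprintf(dst, dst_size, "%s", src);
    return written >= 0 && (size_t)written < dst_size;
}
```

With a 10-byte buffer, `hello` is copied in full, while a 20-character input is cut to 9 characters plus the terminator and reported as truncated. Re-running the fuzzer against the patched build is a simple way to confirm that the crash no longer reproduces.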

Real-World Use Cases

Fuzz testing is widely used in DevSecOps to secure various systems. Below are four real-world scenarios:

- API Security Testing:

  - Scenario: A financial services company tests its REST API for vulnerabilities.

  - Application: Use a fuzzer like `RESTler` to send malformed JSON payloads, identifying issues like SQL injection or DoS vulnerabilities.

  - Industry: FinTech, ensuring compliance with PCI-DSS.

- Network Protocol Testing:

  - Scenario: A telecom provider tests its VoIP protocol implementation.

  - Application: Use `boofuzz` to fuzz SIP packets, uncovering buffer overflows or parsing errors.

  - Industry: Telecommunications, ensuring reliable service delivery.

- File Format Parsing:

  - Scenario: A media streaming platform tests its video parser.

  - Application: Fuzzing with AFL to test MP4 file parsing, identifying crashes due to malformed headers.

  - Industry: Media, preventing exploits in user-uploaded content.

- Cloud-Native Microservices:

  - Scenario: An e-commerce platform tests microservices in a Kubernetes cluster.

  - Application: Deploy fuzzers as pods to test inter-service APIs, ensuring resilience against malformed inputs.

  - Industry: E-commerce, maintaining uptime during high traffic.

Benefits & Limitations

Key Advantages

- Proactive Security: Identifies vulnerabilities before deployment, reducing attack surfaces.

- Automation-Friendly: Easily integrates into CI/CD pipelines for continuous testing.

- Broad Applicability: Works on binaries, APIs, protocols, and file formats.

- Cost-Effective: Automated fuzzing reduces manual testing efforts.

Common Challenges or Limitations

- False Positives: Some crashes may not indicate exploitable vulnerabilities.

- Resource Intensive: Requires significant CPU and memory for long-running tests.

- Coverage Limitations: May miss complex logic bugs not triggered by random inputs.

- Setup Complexity: Configuring fuzzers for specific applications can be challenging.

Best Practices & Recommendations

Security Tips

- Validate Inputs: Pair fuzzing with input validation in the target, so that malformed inputs are rejected cleanly instead of surfacing as crashes.

- Prioritize Targets: Focus on high-risk components (e.g., parsers, APIs).

- Use Sanitizers: Integrate AddressSanitizer (ASan) or UndefinedBehaviorSanitizer (UBSan) for better crash detection.

Performance

- Parallelize Fuzzing: Run multiple fuzzer instances to improve coverage.

- Optimize Input Corpus: Use representative inputs to reduce redundant tests.

- Monitor Resource Usage: Limit memory and CPU usage to avoid system overload.
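The idea behind optimizing the input corpus can be illustrated with a greedy filter: keep an input only if it covers something that earlier inputs did not. The sketch below assumes each input's coverage has already been measured and packed into a 32-bit mask, and `minimize_corpus` is a hypothetical helper. Dedicated tools such as `afl-cmin` apply more refined selection criteria.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Greedy corpus minimization.
   coverage[i] is a bitmask of the code locations reached by input i
   (bit k set means location k was reached).
   keep[i] is set to 1 if input i adds coverage beyond the inputs kept
   before it, and to 0 otherwise. Returns the number of inputs kept. */
size_t minimize_corpus(const uint32_t *coverage, size_t count, int *keep) {
    assert(coverage != NULL && keep != NULL);
    uint32_t seen = 0;
    size_t kept = 0;
    for (size_t i = 0; i < count; i++) {
        keep[i] = (coverage[i] & ~seen) != 0;
        if (keep[i]) {
            seen |= coverage[i];
            kept++;
        }
    }
    return kept;
}
```

The result depends on input order, so sorting the corpus (for example, smallest files first) before filtering is a reasonable refinement.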

Maintenance

- Regular Updates: Keep fuzzing tools and dependencies updated.

- Log Analysis: Regularly review crash logs to prioritize fixes.

Compliance Alignment

- Align fuzzing with standards like OWASP Top 10 or NIST 800-53.

- Document fuzzing results for audit trails (e.g., SOC 2 compliance).

Automation Ideas

- Integrate fuzzing into GitHub Actions or Jenkins for automated testing.

- Use cloud-based fuzzing services such as Google’s OSS-Fuzz (offered to open-source projects) for scalability.

Comparison with Alternatives

| Aspect | Fuzz Testing | Static Application Security Testing (SAST) | Dynamic Application Security Testing (DAST) |
|---|---|---|---|
| Approach | Dynamic, input-based testing | Static code analysis | Dynamic, runtime testing |
| Strengths | Finds runtime vulnerabilities | Detects code-level issues | Simulates real-world attacks |
| Weaknesses | Resource-intensive, may miss logic bugs | Limited to source code access | Limited to exposed interfaces |
| Use Case | APIs, parsers, protocols | Early development, code review | Web apps, black-box testing |
| Automation | High (CI/CD integration) | High (IDE integration) | Moderate (requires environment setup) |

When to Choose Fuzz Testing:

- Use fuzzing for testing complex input-handling components (e.g., file parsers, network protocols).

- Prefer fuzzing when runtime behavior is critical; coverage-guided fuzzing works best when source code is available for instrumentation.

- Combine with SAST and DAST for comprehensive security testing.

Conclusion

Fuzz testing is a powerful technique for enhancing software security in DevSecOps by proactively identifying vulnerabilities through automated, dynamic testing. Its integration into CI/CD pipelines and cloud environments makes it indispensable for modern development workflows. As software complexity grows, fuzz testing will evolve with advancements like AI-driven fuzzing and broader cloud-native support.

Next Steps:

- Experiment with open-source fuzzers like AFL++ or libFuzzer.

- Integrate fuzzing into your CI/CD pipeline for continuous testing.

- Explore cloud-based fuzzing services for scalability.